The Hybrid Lab is not only home to events and seminars with an interdisciplinary focus, but can also be used as a laboratory for scientific or artistic experiments. Melanie Irrgang from TU Berlin used our venue for her research at the intersection of technology and rhythmic movement to music.

Music is often said to be perceived as emotional because it translates expressive movements into audible musical structures. Moreover, music listening experiences and music preferences are influenced by the movement and the emotional connotations that music evokes. Thus, a valid and innovative approach to retrieving and recommending music could be to query it in a corporeal way. The growing importance of smartphones and of mobile listening habits suggests that smartphones, with their built-in motion sensors, are the ideal instrument for implementing this new exploratory access to music.

Under the title “From Motion to Music: Towards Integrating Embodied Music Cognition into Music Recommender System”, I examine how well motion sensor data generated by gestures with a mobile device can discriminate between, and thus predict, corresponding qualities of music.

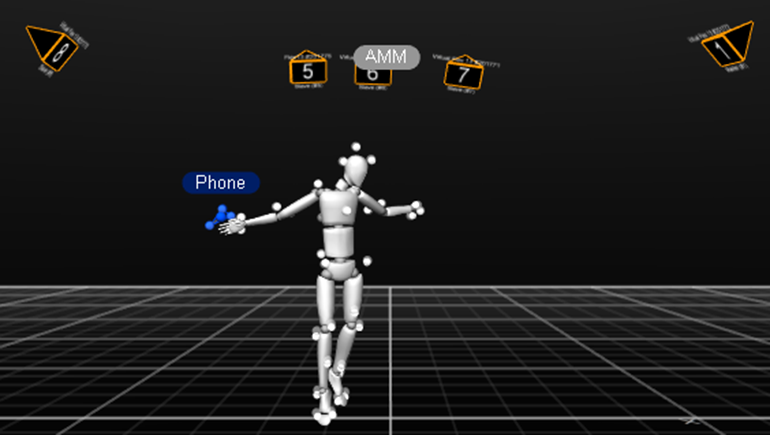

During the first study, participants were tracked by motion capture while moving a smartphone to the music. It showed how rhythmic and melodic qualities of music (ranging from Stravinsky to Sia) influenced the participants' posture, the rhythmicity of their movements, and their use of space. While the first study followed a design rooted in music psychology in order to understand how music evokes movement, the second study will transfer the outcomes to the fields of computer science and music information retrieval. An online study will be conducted to evaluate how well the findings from the first study scale to a large set of participants and how well suited they are to technical implementation in a real music recommendation scenario.

These findings will thus help us understand, on the one hand, how contemporary movement and music relate to each other and, on the other hand, how this knowledge can be applied to integrate embodied music cognition into music recommender systems.

– Melanie Irrgang

Melanie Irrgang holds a degree in computer science (M.Sc. Informatik) from TU Berlin. She is writing her PhD in the fields of embodied music cognition and music information retrieval with the Audio Communication Group (TU Berlin). Her interest in this topic stems as much from playing bass and piano as from dancing. In addition, she works as a research associate in the field of computational linguistics at GFaI e.V., where she develops tools to facilitate citizen science projects.